When modern science and technology were just beginning to take shape, Blaise Pascal and Gottfried Wilhelm Leibniz already imagined that machines might one day achieve artificial intelligence, that is, intelligence on a par with that of humans.

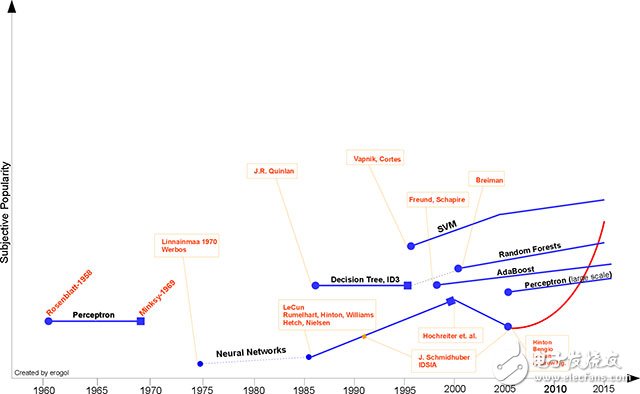

Machine learning (ML) is one of the most important branches of AI and is extremely popular in both industry and academia, with companies and universities investing heavily in ML research. In recent years, ML has made significant progress on many tasks, reaching or even surpassing human-level performance; for example, on traffic sign recognition [1], ML reached an accuracy of 98.98%, surpassing humans. Figure 1 shows a rough timeline of ML with many of its milestones. Getting familiar with it first will make the rest of this article easier to follow.

Figure 1 Machine learning timeline

The first person to steer machine learning toward popularity was Donald Hebb. In 1949 he proposed Hebbian learning theory, a learning paradigm grounded in neuropsychology. With a simple extension, the theory can be used to study the correlations between the nodes of a recurrent neural network: the network records what its activity patterns have in common and then works like a memory. Hebb stated the principle as follows:

Let us assume that the persistence or repetition of a reverberatory activity (or "trace") tends to induce lasting cellular changes that add to its stability... When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased [1].
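In modern notation, Hebb's principle is often summarized as Δw_i = η·x_i·y: the weight from input i grows in proportion to the product of that input's activity x_i and the output activity y, scaled by a learning rate η. The minimal sketch below assumes exactly this plain form of the rule; the function name `hebbian_update`, the learning rate, and the two-input setup are illustrative, not from the text. Input 0 plays the role of cell A and the output plays cell B.

```python
def hebbian_update(weights, x, y, lr=0.1):
    """One plain Hebbian step: w_i += lr * x_i * y (pre-activity times post-activity)."""
    return [w + lr * xi * y for w, xi in zip(weights, x)]


# Hypothetical setup: input 0 ("cell A") is active together with the output
# ("cell B") on every step, while input 1 is never active.
weights = [0.0, 0.0]
for _ in range(10):
    weights = hebbian_update(weights, x=[1.0, 0.0], y=1.0)

print(weights)  # weight 0 has grown to about 1.0; weight 1 is still 0.0
```

Only the connection that repeatedly takes part in firing the output is strengthened. Note that the plain rule never weakens a weight, so weights can grow without bound; later variants such as Oja's rule add normalization for that reason.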

In 1952, Arthur Samuel at IBM wrote a checkers-playing program that learned an implicit model from board positions in order to choose better next moves. After playing many games against the program, Samuel concluded that it could reach a very high level of play after a period of learning.

With this program, Samuel refuted the prevailing belief that machines cannot go beyond their explicitly written code to learn patterns the way humans do. He coined the term machine learning, which he defined as the field of study that gives computers the ability to learn without being explicitly programmed.

In 1957, Frank Rosenblatt's perceptron became the second machine learning model with roots in neuroscience, and it is quite similar to today's ML models. Its arrival caused quite a stir at the time because it was easier to implement than Hebb's idea. Rosenblatt described the perceptron as follows:

The perceptron is designed to illustrate some of the fundamental properties of intelligent systems in general, without becoming too deeply enmeshed in the special, and frequently unknown, conditions which hold for particular biological organisms [2].

Three years later, Widrow [4] earned his place in ML history by inventing the Delta learning rule, which was soon put to good use for training perceptrons. In essence, it is the familiar least-squares problem. Combining the perceptron with the Delta rule yields a good linear classifier. However, as so often happens in history, the excitement around the perceptron was doused with cold water by Minsky [3] in 1969. He posed the famous XOR problem and showed that the perceptron is powerless on data that, like XOR, are not linearly separable. This opened a gap that seemed almost insurmountable to the neural network (NN) community at the time, a period that became known as "Minsky's seal." It was not until the 1980s that NN researchers broke this spell.

Figure 2 The XOR problem: an example of linearly inseparable data

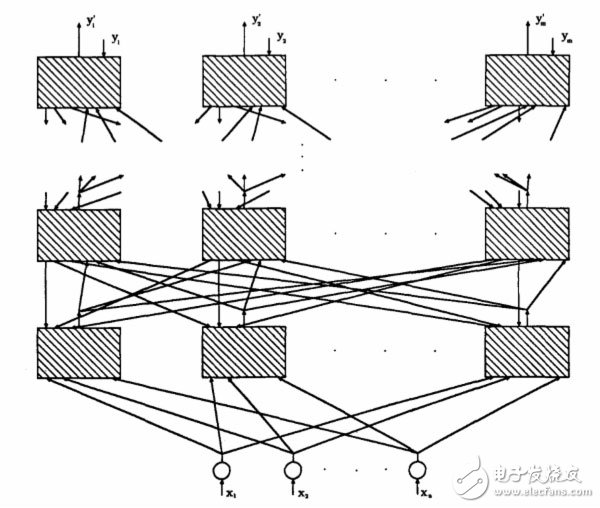
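To make the XOR argument concrete, here is a minimal sketch assuming the batch form of the Widrow-Hoff (Delta, or LMS) rule: a linear unit is fitted by gradient descent on squared error, and its output is then thresholded at 0.5, perceptron-style. The function names, learning rate, and epoch count are illustrative choices. On AND, which is linearly separable, the resulting classifier gets all four points right; on XOR no choice of weights can, so accuracy stays below 100%.

```python
def train_delta_rule(samples, lr=0.5, epochs=2000):
    """Batch Delta (Widrow-Hoff / LMS) rule: gradient descent on mean squared error."""
    w = [0.0, 0.0, 0.0]  # [w1, w2, bias]
    n = len(samples)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for (x1, x2), t in samples:
            x = (x1, x2, 1.0)  # constant input 1.0 carries the bias
            err = t - sum(wi * xi for wi, xi in zip(w, x))
            for i in range(3):
                grad[i] += err * x[i]
        # Move each weight along err * input, averaged over the batch
        w = [wi + lr * g / n for wi, g in zip(w, grad)]
    return w


def predict(w, x1, x2):
    # Threshold the linear output at 0.5 to get a 0/1 class
    return 1 if w[0] * x1 + w[1] * x2 + w[2] > 0.5 else 0


def accuracy(w, samples):
    return sum(predict(w, *x) == t for x, t in samples) / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_and = train_delta_rule(AND)
w_xor = train_delta_rule(XOR)
print("AND accuracy:", accuracy(w_and, AND))  # 1.0
print("XOR accuracy:", accuracy(w_xor, XOR))  # below 1.0 whatever the weights
```

The least-squares fit for AND settles near w1 = w2 = 0.5 with bias -0.25, which the 0.5 threshold turns into a correct classifier. For XOR the fit collapses to a roughly constant output of 0.5, and no threshold on a linear function can separate the two classes.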

After this seal, the development of ML almost stalled. Although the idea of backpropagation (BP) had been proposed by Linnainmaa [5] in 1970 under the name "reverse mode of automatic differentiation", it was not until 1981 that Werbos [6] applied it to the multi-layer perceptron (MLP), which remains a key component of neural network architectures to this day. The arrival of the MLP and the BP algorithm helped launch the second wave of neural network research. In 1985 and 1986, NN researchers succeeded in putting BP into practice for training MLPs (Rumelhart, Hinton, and Williams [7]; Hecht-Nielsen [8]).

Figure 3 From Hecht-Nielsen [8]

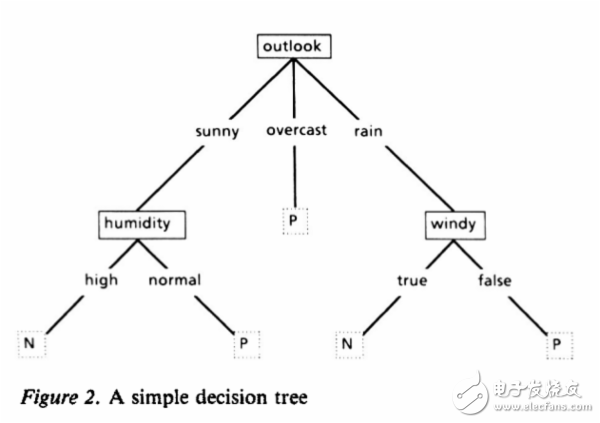
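To see why a hidden layer trained with BP gets past the XOR barrier, here is a minimal pure-Python sketch that trains a small MLP on the XOR data from Figure 2. It assumes sigmoid units, a sum-of-squares error, full-batch gradient descent, four hidden units, and a fixed random seed; these, and all names in the code, are illustrative choices rather than the exact setup of [7] or [8]. The backward pass applies the chain rule from the output layer back to the hidden layer, which is the reverse-mode differentiation idea behind BP.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def train_mlp_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output sigmoid MLP on XOR with BP.

    Returns the forward function and the loss before training and after each epoch.
    """
    rng = random.Random(seed)
    # W1[j] = [weight from x1, weight from x2, bias] for hidden unit j
    W1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # W2[:hidden] = hidden-to-output weights, W2[hidden] = output bias
    W2 = [rng.uniform(-1, 1) for _ in range(hidden + 1)]

    def forward(x1, x2):
        h = [sigmoid(w[0] * x1 + w[1] * x2 + w[2]) for w in W1]
        y = sigmoid(sum(W2[j] * h[j] for j in range(hidden)) + W2[hidden])
        return h, y

    def loss():
        # Sum-of-squares error E = 1/2 * sum (y - t)^2 over the four patterns
        return 0.5 * sum((forward(*x)[1] - t) ** 2 for x, t in XOR_DATA)

    history = [loss()]
    for _ in range(epochs):
        gW1 = [[0.0, 0.0, 0.0] for _ in range(hidden)]
        gW2 = [0.0] * (hidden + 1)
        for (x1, x2), t in XOR_DATA:
            h, y = forward(x1, x2)
            # dE/dz at the output: (y - t) times the sigmoid derivative y(1 - y)
            d_out = (y - t) * y * (1 - y)
            gW2[hidden] += d_out
            for j in range(hidden):
                gW2[j] += d_out * h[j]
                # Propagate the error back through W2[j] and hidden unit j's sigmoid
                d_h = d_out * W2[j] * h[j] * (1 - h[j])
                gW1[j][0] += d_h * x1
                gW1[j][1] += d_h * x2
                gW1[j][2] += d_h
        # Full-batch gradient-descent step on every weight
        for j in range(hidden):
            for k in range(3):
                W1[j][k] -= lr * gW1[j][k]
        for j in range(hidden + 1):
            W2[j] -= lr * gW2[j]
        history.append(loss())
    return forward, history


forward, history = train_mlp_xor()
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
for (x1, x2), t in XOR_DATA:
    print((x1, x2), "target", t, "output", round(forward(x1, x2)[1], 3))
```

Plain gradient descent on XOR usually drives the four outputs toward their targets, but depending on the seed it can stall in a poor local minimum; trying another seed or more hidden units typically helps.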

Meanwhile, another story was unfolding in parallel. In 1986, J. R. Quinlan [9] proposed another equally famous ML algorithm, the decision tree, specifically the ID3 algorithm. It became a beacon for another mainstream branch of machine learning. With its simple rules and clear reasoning, ID3 solved many real-world problems, and unlike the black-box NN algorithms, it was delivered as practical, usable software.

After ID3, many other algorithms and improvements sprang up, such as ID4, regression trees, and CART. Decision trees remain an active area of ML to this day.

Figure 4 A simple decision tree
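ID3 grows a tree top-down, at each node splitting on the attribute with the highest information gain, that is, the largest reduction in label entropy H(S) = -Σ p_c log2 p_c. A minimal sketch of that core loop follows; the toy "outlook"/"windy" dataset and all function names are hypothetical, and practical details of Quinlan's ID3 (such as handling noisy data or attribute values unseen during training) are left out.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, attr):
    """Entropy reduction obtained by splitting on attribute `attr`."""
    n = len(labels)
    remainder = 0.0
    for value in set(row[attr] for row in rows):
        subset = [lab for row, lab in zip(rows, labels) if row[attr] == value]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder


def id3(rows, labels, attrs):
    """Build a decision tree as nested dicts {attr: {value: subtree_or_label}}."""
    if len(set(labels)) == 1:  # pure node: return its class
        return labels[0]
    if not attrs:  # no attributes left: return the majority class
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: information_gain(rows, labels, a))
    tree = {best: {}}
    for value in set(row[best] for row in rows):
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        tree[best][value] = id3(
            [rows[i] for i in idx],
            [labels[i] for i in idx],
            [a for a in attrs if a != best],
        )
    return tree


# Hypothetical toy data: "outlook" fully determines the label, "windy" is irrelevant.
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "rainy", "windy": False},
    {"outlook": "rainy", "windy": True},
]
labels = ["no", "no", "yes", "yes"]

print(information_gain(rows, labels, "outlook"))  # 1.0
print(information_gain(rows, labels, "windy"))    # 0.0
print(id3(rows, labels, ["outlook", "windy"]))    # {'outlook': {'sunny': 'no', 'rainy': 'yes'}} (inner key order may vary)
```

Because every split is chosen by a simple, inspectable score, the finished tree can be read as a set of if-then rules, which is the interpretability the text contrasts with black-box NN models.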

Next came one of the most important breakthroughs in ML, the Support Vector Machine (SVM), proposed by Vapnik and Cortes [10] in 1995 with strong theoretical grounding and empirical results. From then on, the ML community was sharply divided into two camps, NN and SVM. Around 2000, once the kernel method was introduced, SVM gained the upper hand and outperformed NN models in many fields. In addition, SVM could draw on a body of theory that NN models lacked, including convex optimization, margin-based generalization theory, and kernel methods. With support from both theory and practice, SVM developed extremely quickly during this period.

![Figure 5 From Vapnik and Cortes [10]](http://i.bosscdn.com/blog/1S/10/1R/45_0.png)

Figure 5 From Vapnik and Cortes [10]
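The kernel method that helped SVM pull ahead rests on one observation: some kernel functions compute an inner product in a higher-dimensional feature space without ever building that space explicitly. The minimal sketch below assumes the homogeneous degree-2 polynomial kernel K(x, z) = (x·z)² on 2-D inputs, checks it against its explicit feature map φ(x) = (x1², √2·x1·x2, x2²), and shows that XOR, encoded with ±1 inputs, becomes linearly separable in that feature space. It illustrates the kernel idea only and is not an SVM solver; the names and the hand-picked weight vector are illustrative.

```python
import math


def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel K(x, z) = (x . z)^2 for 2-D inputs."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def phi(x):
    """Explicit feature map whose inner product reproduces poly2_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# The kernel value equals the feature-space inner product (up to float rounding).
x, z = (1.0, 2.0), (3.0, -1.0)
print(poly2_kernel(x, z), dot(phi(x), phi(z)))

# XOR with inputs in {-1, +1}: label +1 when the two inputs differ.
xor_pm = [((-1, -1), -1), ((-1, 1), 1), ((1, -1), 1), ((1, 1), -1)]
w = (0.0, -1.0, 0.0)  # a separating hyperplane in feature space, chosen by hand
for point, label in xor_pm:
    score = dot(w, phi(point))
    print(point, label, "correct" if (score > 0) == (label > 0) else "wrong")
```

The middle feature √2·x1·x2 is negative exactly when the inputs differ, so a single linear weight on it separates XOR. An SVM with this kernel never computes φ explicitly; it only evaluates K on pairs of training points.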
