Many people say that Google is imitating Apple, but whether that imitation is mere following or a deliberate strategy, we don't know. On the choice between AI and AR, however, the two have taken different paths, and each path is the result of careful deliberation. What will these different choices bring them? We will wait and see.

Apple and Google each recently held a new product launch. The products shown at both events drew great interest from onlookers. Let us briefly review them here.

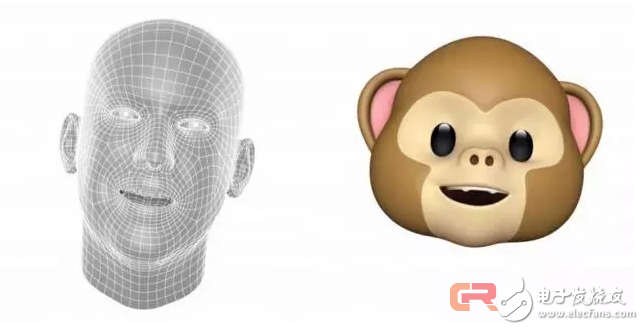

At the Apple event, the highlight was clearly the new phone lineup, especially the iPhone X. The theme of Apple's new phone is AR. At the event, Apple VP Phil Schiller described the iPhone X as "the first smartphone that is really built for AR." On the hardware side, the iPhone X carries a revolutionary front-facing structured-light depth camera that captures the face in 3D at high speed. Meanwhile, the conventional RGB camera reaches a frame rate of 60 fps, and the new gyroscope and accelerometer enable accurate motion tracking. The A11 chip inside the phone integrates a powerful GPU and a neural engine acceleration module to run the high-performance computer vision algorithms that AR applications need.

On the software side, Apple's AR highlight is Animoji, which tracks the user's facial expression in real time. This idea was first introduced by Snapchat. However, Snapchat's users are mainly young people, so the narrow user base became a bottleneck for wider adoption. Apple now intends to build the feature into iMessage, so that more people can encounter AR inside an everyday application. This is arguably a big step toward popularizing AR. In addition, Apple showed the AR games "The Machines" and "Warhammer 40K: Free Blade", as well as AR applications such as "Skyguide", which superimposes constellation information on the real night sky, and "At Bat", which superimposes player information on the live scene at baseball games.

Apple's Animoji is an important attempt to promote AR on a large scale

Google's event still followed the familiar "AI First" routine, and the technology running through the whole event was Google Assistant. First came a three-piece set of Google Home smart speakers. Besides playing music under Google Assistant voice control, they can also control the Nest series of smart home devices in real time. Next came the Pixel 2 series phones and the PixelBook, whose selling point is again the artificial intelligence assistant, Google Assistant. For example, the Pixel 2 can take photos by voice command. The final accessories segment introduced Pixel Buds, a Bluetooth headset that can translate speech in real time, and Clips, a clip-on camera that shoots automatically using artificial intelligence. These two gadgets are a lot of fun, and they were widely shared on social media.

Google Assistant is Google’s key technology for all products

Apple's bottom-up and Google's top-down strategy

Both are technology giants, so why did Apple choose AR while Google chose AI? In short, Apple is ultimately a consumer electronics manufacturer whose products must be tightly bound to the user experience, so it takes AR, which is closely tied to user experience, as its breakthrough point. Google started from enterprise applications and holds an advantage in AI technology. It launches consumer electronics in the hope that they will serve as an entry point that draws more users to its artificial intelligence services, so it makes sense that everything revolves around AI.

First, take a look at Apple and AR. In essence, AR is a new mode of human-computer interaction and information acquisition, and it can be considered a media revolution. The last media revolution was the mobile media introduced by smartphones, and the one before that was the multimedia PC. Interestingly, Apple played a very important role in both the PC and smartphone revolutions (the Macintosh and the iPhone), so Apple can clearly see that AR is the next revolution in user experience, and it has the confidence and ability to lead that revolution. Once AR truly takes off, the market will be huge, especially for AR virtual content, where money comes in quickly. Overseas, Pokémon Go is a pioneer in AR games, with revenue approaching $1 billion in the second half of 2016. In China, Honor of Kings can earn 150 million yuan a day. It is conceivable that when AR technology matures, revenue from its virtual content will far exceed these two figures. Apple's ambition is to build the underlying platform (hardware devices, low-level software frameworks, the App Store developer ecosystem, and so on) and then make money from the upper-layer ecosystem built on that platform. Apple's strategy can therefore be described as bottom-up. The iPhone X and the earlier release of ARKit are a serious attempt by Apple to build an AR ecosystem.

On the other hand, AI is also an important technology for Apple. Wherever it can enhance the user experience, Apple uses AI without reservation, as in the AI-based Face ID on the iPhone X and the earlier AI voice assistant Siri. However, AI is only a foundational technology for Apple. Like 4G or WiFi, it can become an important part of a product, but it will not be the product's theme. To be the theme, a technology must be able to overturn the user experience, as the touch screen did 10 years ago and as AR does today.

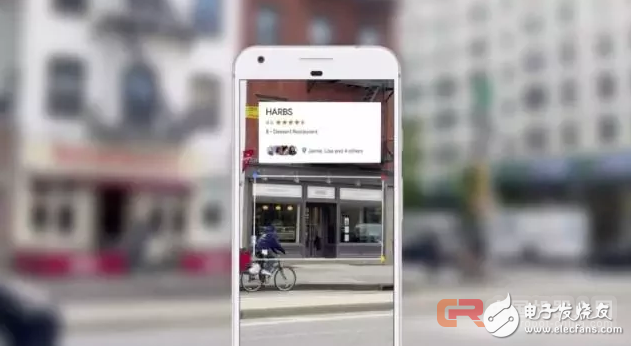

Google is different from Apple. Its main business is in the enterprise market, and its AI-based upper-layer strategy has long been settled. Launching a series of consumer electronics products this time opens a new battlefield for AI. Across all the products it released, Google's unified user portal is Google Assistant, and Google Assistant is ultimately Google's artificial intelligence service in the cloud, so the hardware Google released is really a new entry point to its AI services. In this respect, Google's strategy can be described as top-down: top-level services and software first, then the underlying hardware. Of course, Google does not rule out AR applications. It released Project Tango as an experimental AR product a few years ago, and this year it released ARCore, intending to compete with Apple's ARKit on the Android mobile platform. At the event, Google also showed some AR applications, the most interesting of which is Google Lens, which uses artificial intelligence to overlay relevant information on images taken by users. As mentioned earlier, AR superimposes virtual content on real content to create new ways of obtaining information, and Google Lens is a model for combining AI with AR.

Google Lens identifies the content of an image and overlays related information on it, making it a model for combining AI and AR.

The dilemma of Google and other artificial intelligence products: a legendary product manager is needed to bring AI technology down to earth

Google announced many products at the event, and its technology is undeniably strong, but from a product point of view there is a great deal to question. Take Pixel Buds first, the product most hyped as "black technology". Its highlight is simultaneous interpretation. But what is the application scenario? At home, everyone speaks the same language and has no need for it. Abroad, most people now speak English, so it is of little use there either. Where it really is needed, an Internet connection is required, and both parties must be using Pixel Buds before they can truly communicate. It seems that few people really need it. The problem it tries to solve is probably a pseudo-demand for now.

The technology behind Pixel Buds looks cool, but there are many restrictions on its use. Real-time translation is probably a pseudo-demand.

Another feature that looks great is the Pixel 2's ability to show on the lock screen what music is playing in the surrounding environment. It looks very cool at first glance, but think about it: the microphone must stay on at all times to make this work, and music clips must also be sent to the cloud for recognition. It costs both power and data traffic. For most users, knowing what music is playing nearby is nice to have, icing on the cake at best. Once it comes at the cost of battery life and data traffic, I think most people will choose to turn the feature off.

The Pixel 2 can recognize music playing nearby at any time. It looks appealing, but will you use this feature if it consumes extra power and network traffic?

Many of the other products Google released at the event, such as the auto-shooting Clips camera, are likewise more or less gimmick than practical tool. But if they do not solve a practical problem, these products will end up as toys for geeks and will not persuade the public to open their wallets. Google's earlier Google Glass is an example: the technology was very advanced and the concept was very advanced, but no one bought it. In fact, this is true not only of Google's products. The AI consumer electronics of other companies have a similar problem: they are holding a hammer (technology) and looking for nails (applications). Artificial intelligence seems to have a bright outlook, but it is still unclear which consumer applications it is good for. Smart speakers look somewhat promising, but the "war of a hundred speakers" has already turned that market into a red ocean. More importantly, the market for smart speakers is too small compared with the ambitions of many AI players, so a better place for applications to land must be found.

With history as a mirror, the current AI era looks very much like the Internet bubble at the beginning of this century. Everyone knows this is the future, but how to turn a profit while burning money to seize the market is a big problem. Back then, the portals' profit models, such as advertising and paid email, later proved unreliable. In the end, Sina got through the hard times on its SMS service, and NetEase was brought back to life by games. Moreover, the final winners of the Internet era were rising stars such as Google and Facebook. The companies of the bubble period, such as AOL, Hotmail, and Yahoo, all either faded away or were acquired by other companies. So will Google and other established companies find the killer application for AI, or will they be left dead on the beach by the rising stars of the AI era? Let us wait and see.
